The question is who decides what counts as a “conspiracy narrative” and what does not. When a system claims to always be right, one should become suspicious. Socrates already said: “I know that I know nothing.” The only thing that is truly certain is that we die.

Science is a dynamic activity. In the past, AI would have been considered a joke, and even the Internet would have been considered a danger, although it now accompanies us every day. What is held to be right today can turn out to be wrong years later.

The real danger is the arrogance of mankind, not AI itself. I see AI as a tool, like money. It is humanity that decides whether AI harms or helps others.

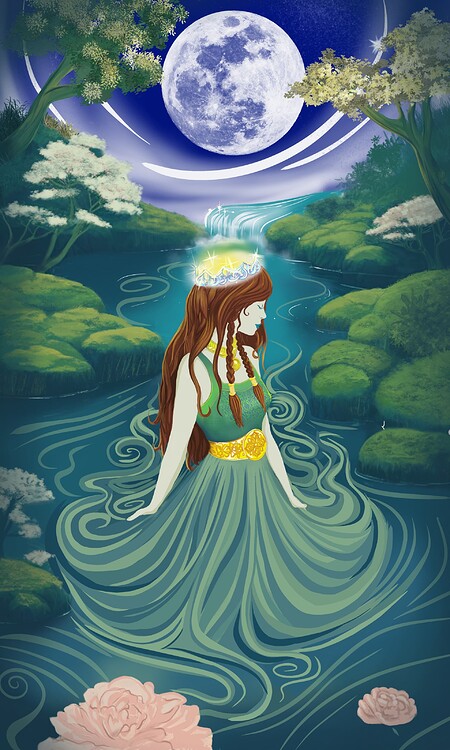

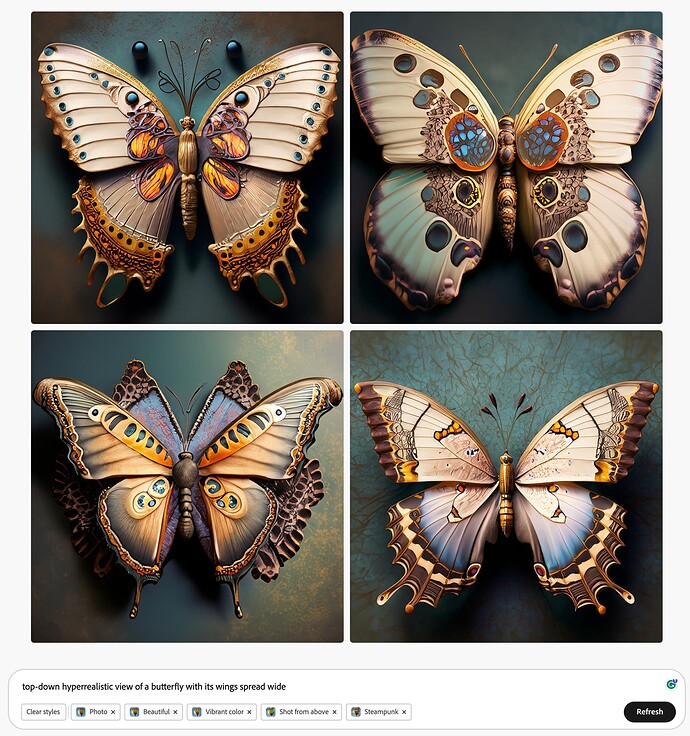

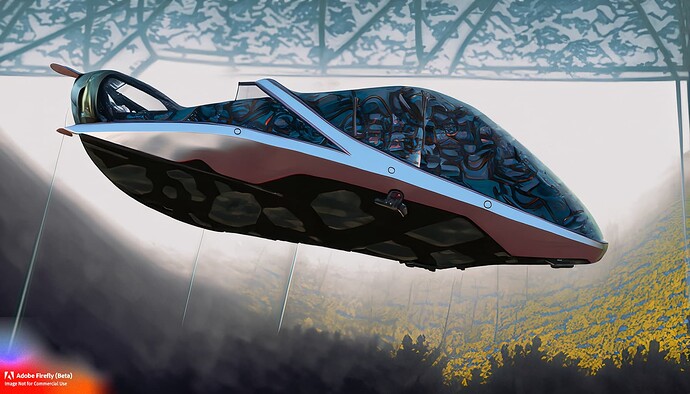

AI is not perfect, at least not yet, and I think it will remain hard for it to generate an image that fully makes sense. The images look like dreams: they make sense only up to a certain point, especially when it comes to the details of a picture. I do a lot of illustration myself, and AI helps me find ideas and also draw. Sometimes I also completely rework an AI image so that it makes sense.

AI:

Edit.